2025

Understanding Biased Representations of People in Algorithmic Decision Making

Kristen M. Scott

KU Leuven 2025

In machine learning (ML) using tabular data, the attributes selected to represent individuals are operationalizations of individuals' characteristics and life circumstances. Representations are not replications, and there is always a discrepancy between the representation and that which is represented. This thesis expands this premise into an examination of computational representations of social data for decision making, including differing disciplinary approaches to the question of algorithmic harms. (Image: Kokia & Sawyer; https://unsplash.com)

2024

Policy advice and best practices on bias and fairness in AI

Jose M. Alvarez, Alejandra Bringas Colmenarejo, Alaa Elobaid, Simone Fabbrizzi, Miriam Fahimi, Antonio Ferrara, Siamak Ghodsi, Carlos Mougan, Ioanna Papageorgiou, Paula Reyero, Mayra Russo, Kristen M. Scott, Lara State, Xuan Zhao, Salvatore Ruggieri

Ethics and Information Technology 2024

The literature addressing bias and fairness in AI models (fair-AI) is growing at a fast pace, making it difficult for new researchers and practitioners to gain a bird’s-eye view of the field. In particular, many policy initiatives, standards, and best practices in fair-AI have been proposed for setting principles, procedures, and knowledge bases to guide and operationalize the management of bias and fairness. The first objective of this paper is to concisely survey the state of the art of fair-AI methods and resources, and the main policies on bias in AI, with the aim of providing such bird’s-eye guidance for both researchers and practitioners. The second objective of the paper is to contribute to the state of the art in policy advice and best practices by drawing on the results of the NoBIAS research project. We present and discuss a few relevant topics organized around the NoBIAS architecture, which is made up of a Legal Layer, focusing on the European Union context, and a Bias Management Layer, focusing on understanding, mitigating, and accounting for bias. (Image: Yutong Liu; https://betterimagesofai.org; https://creativecommons.org/licenses/by/4.0/)

Bridging Research and Practice Through Conversation: Reflecting on Our Experience

Mayra Russo, Mackenzie Jorgensen, Kristen M. Scott, Wendy Xu, D.H. Nguyen, Jessie Finocchiaro, Matthew Olckers

Conference on Equity and Access in Algorithms, Mechanisms, and Optimization (EAAMO) 2024

A working group with an ostensible focus on the use of research and quantitative methods for social good reflects on our experience of conducting conversations with practitioners from a range of different backgrounds, including refugee rights, conservation, addiction counseling, and municipal data science. We consider the lessons that emerged and the impact of these conversations on our work, the potential roles we can serve as researchers, and the challenges we anticipate as we move forward in these collaborations. (Image: Kokia & Sawyer; https://unsplash.com)

Articulation Work and Tinkering for Fairness in Machine Learning

Miriam Fahimi, Mayra Russo, Kristen M. Scott, Maria-Esther Vidal, Bettina Berendt, Katharina Kinder-Kurlanda

Proceedings of the ACM on Human-Computer Interaction (CSCW) 2024

The field of fair AI aims to counter biased algorithms through computational modelling. However, it faces increasing criticism for perpetuating the use of overly technical and reductionist methods. In this paper, we study the tension between computer science (CS) and socially-oriented and interdisciplinary (SOI) research we observe within the emerging field of fair AI. We draw on the concepts of 'organizational alignment' and 'doability' to discuss how organizational conditions, articulation work, and ambiguities of the social world constrain the doability of SOI research for CS researchers.

2023

“We try to empower them” - Exploring Future Technologies to Support Migrant Jobseekers

Sonja Mei Wang, Kristen M. Scott, Margarita Artemenko, Milagros Miceli, Bettina Berendt

Conference on Fairness, Accountability, and Transparency (FAccT) 2023

Previous work on technology in Public Employment Services and job market chances has focused on profiling systems that are intended for tasks such as assessing and classifying jobseekers. To integrate into the local job market, migrants and refugees seek support from the Public Employment Services (PES), but also non-profit, non-governmental organizations (herein referred to as third sector organizations, or TSOs). How do design visions for technologies to support jobseekers change when developed not under bureaucratic rules but by people interacting directly and informally with jobseekers? We focus on the perspectives of TSO workers assisting migrants and refugees seeking support for their job search. Through interviews and a design fiction exercise, we investigate (1) the role of TSO workers, (2) factors beyond those used in profiling systems that they consider relevant, and (3) their ideal technology. We describe how TSO workers contextualize formal criteria used in profiling systems while prioritising jobseekers’ personal interests and strengths. Based on our findings on existing tools and methods, and imagined future technologies, we propose a software-based project that expands existing job taxonomies into a coordinated resource combining job characteristics, required competencies, and soft skills to support multiple informational tools for jobseekers.

Domain Adaptive Decision Trees: Implications for Accuracy and Fairness

Jose M. Alvarez, Kristen M. Scott, Salvatore Ruggieri, Bettina Berendt

Conference on Fairness, Accountability, and Transparency (FAccT) 2023

In uses of pre-trained machine learning models, it is a known issue that the target population in which the model is being deployed may not have been reflected in the source population with which the model was trained. This can result in a biased model when deployed, leading to a reduction in model performance. One risk is that, as the population changes, certain demographic groups will be under-served or otherwise disadvantaged by the model, even as they become more represented in the target population. The field of domain adaptation proposes techniques for a situation where label data for the target population does not exist, but some information about the target distribution does exist. In this paper we contribute to the domain adaptation literature by introducing domain-adaptive decision trees (DADT). We focus on decision trees given their growing popularity due to their interpretability and performance relative to other more complex models. With DADT we aim to improve the accuracy of models trained in a source domain (or training data) that differs from the target domain (or test data). We propose an in-processing step that adjusts the information gain split criterion with outside information corresponding to the distribution of the target population. We demonstrate DADT on real data and find that it improves accuracy over a standard decision tree when testing in a shifted target population. We also study the change in fairness under demographic parity and equal opportunity. Results show an improvement in fairness with the use of DADT.
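The idea of adjusting the information gain split criterion with target-population information can be illustrated with a minimal sketch. This is not the paper's implementation: it assumes a single categorical attribute, assumes that the label distribution within each attribute value is the same in source and target (a covariate-shift assumption), and uses hypothetical names (`information_gain`, `target_shares`) chosen for illustration only.

```python
import math
from collections import Counter, defaultdict


def entropy_from_probs(probs):
    """Shannon entropy (in bits) of a discrete distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def information_gain(values, labels, target_shares=None):
    """Information gain of splitting on one categorical attribute.

    values, labels: attribute values and class labels from the SOURCE sample.
    target_shares:  optional dict mapping attribute value -> assumed share of
                    that value in the TARGET population (hypothetical input).
                    If omitted, the source shares are used (standard IG).
    """
    groups = defaultdict(Counter)
    for v, y in zip(values, labels):
        groups[v][y] += 1
    n = len(labels)

    if target_shares is None:
        weights = {v: sum(c.values()) / n for v, c in groups.items()}
    else:
        total = sum(target_shares.get(v, 0.0) for v in groups)
        if total == 0:
            raise ValueError("target_shares has no mass on observed values")
        weights = {v: target_shares.get(v, 0.0) / total for v in groups}

    # P(label | value), always estimated from the source sample.
    cond = {
        v: {y: k / sum(c.values()) for y, k in c.items()}
        for v, c in groups.items()
    }

    # Label marginal implied by the chosen weights.
    marginal = Counter()
    for v, dist in cond.items():
        for y, p in dist.items():
            marginal[y] += weights[v] * p

    parent = entropy_from_probs(marginal.values())
    children = sum(
        weights[v] * entropy_from_probs(cond[v].values()) for v in groups
    )
    return parent - children


# Toy source sample: value "b" is rare in the source but common in the target.
values = ["a", "a", "a", "a", "b", "b"]
labels = [1, 1, 0, 0, 1, 1]
print(information_gain(values, labels))
print(information_gain(values, labels, target_shares={"a": 0.2, "b": 0.8}))
```

In this sketch only the weights placed on each branch change; the within-branch label frequencies still come from the source data, which is why some outside knowledge of the target distribution is enough and target labels are not needed.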

2022

Fairness in Agreement With European Values: An Interdisciplinary Perspective on AI Regulation

Alejandra Bringas Colmenarejo, Luca Nannini, Alisa Rieger, Kristen M Scott, Xuan Zhao, Gourab K Patro, Gjergji Kasneci, Katharina Kinder-Kurlanda

Conference on Fairness, Accountability, and Transparency (FAccT) 2022

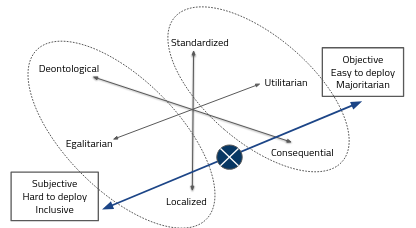

With increasing digitalization, Artificial Intelligence (AI) is becoming ubiquitous. AI-based systems to identify, optimize, automate, and scale solutions to complex economic and societal problems are being proposed and implemented. This has motivated regulation efforts, including the Proposal of an EU AI Act. This interdisciplinary position paper considers various concerns surrounding fairness and discrimination in AI, and discusses how AI regulations address them, focusing on (but not limited to) the Proposal. We first look at AI and fairness through the lenses of law, (AI) industry, sociotechnology, and (moral) philosophy, and present various perspectives. Then, we map these perspectives along three axes of interests: (i) Standardization vs. Localization, (ii) Utilitarianism vs. Egalitarianism, and (iii) Consequential vs. Deontological ethics, which leads us to identify a pattern of common arguments and tensions between these axes. Positioning the discussion within the axes of interest and with a focus on reconciling the key tensions, we identify and propose the roles AI Regulation should take to make the endeavor of the AI Act a success in terms of AI fairness concerns.

Algorithmic Tools in Public Employment Services: Towards a Jobseeker-Centric Perspective

Kristen M. Scott, Sonja Mei Wang, Milagros Miceli, Pieter Delobelle, Karolina Sztandar-Sztanderska, Bettina Berendt

Conference on Fairness, Accountability, and Transparency (FAccT) 2022

Data-driven and algorithmic systems have been introduced to support Public Employment Services (PES) throughout Europe, and globally, and have often been met with critique and controversy. Here we draw attention to the needs and expectations of people directly affected by these systems, i.e., jobseekers. We argue that the limitations and risks of current systems cannot be addressed through minor adjustments but require a more fundamental change to the role of PES, and algorithmic systems within it.

2020

“Human, All Too Human”: NOAA Weather Radio and the Emotional Impact of Synthetic Voices

Kristen M. Scott, Simone Ashby, Julian Hanna

Conference on Human Factors in Computing Systems (CHI) 2020

The integration of text-to-speech into an open technology stack for low-power FM community radio stations is an opportunity to automate laborious processes and increase accessibility to information in remote communities. However, there are open questions as to the perceived contrast of synthetic voices with the local and intimate format of community radio. This paper presents an exploratory focus group on the topic, followed by a thematic analysis of public comments on YouTube videos of the synthetic voices used for broadcasting by National Oceanic and Atmospheric Administration (NOAA) Weather Radio. We find that despite observed reservations about the suitability of TTS for radio, there is significant evidence of anthropomorphism, nostalgia and emotional connection in relation to these voices. Additionally, introduction of a more "human sounding" synthetic voice elicited significant negative feedback. We identify pronunciation, speed, suitability to content and acknowledgment of limitations as more relevant factors in listeners' stated sense of connection.
